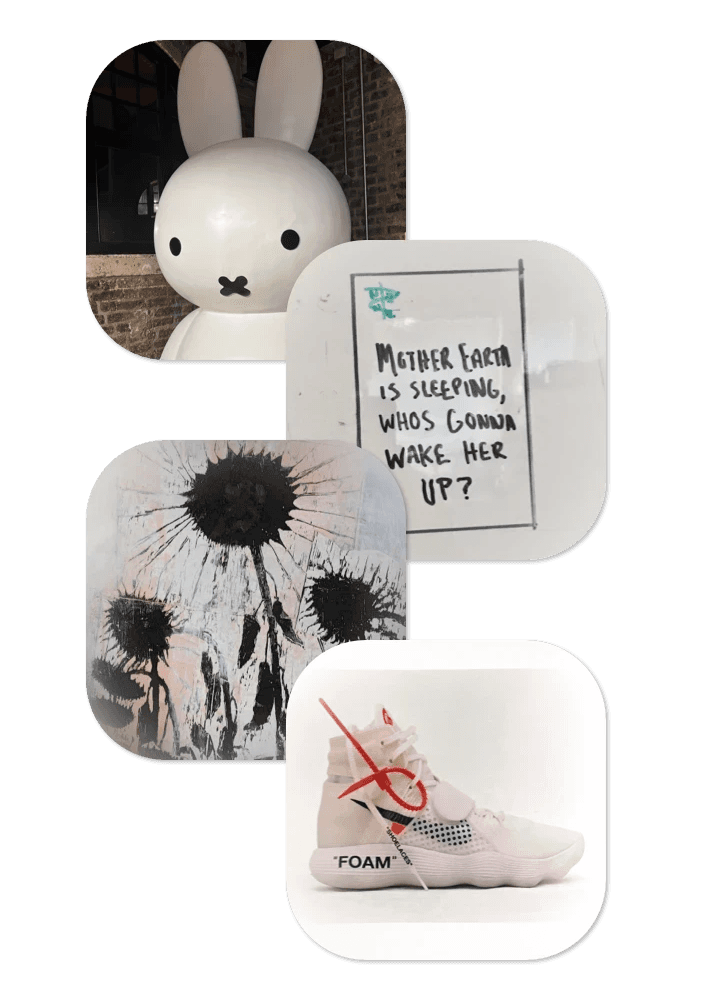

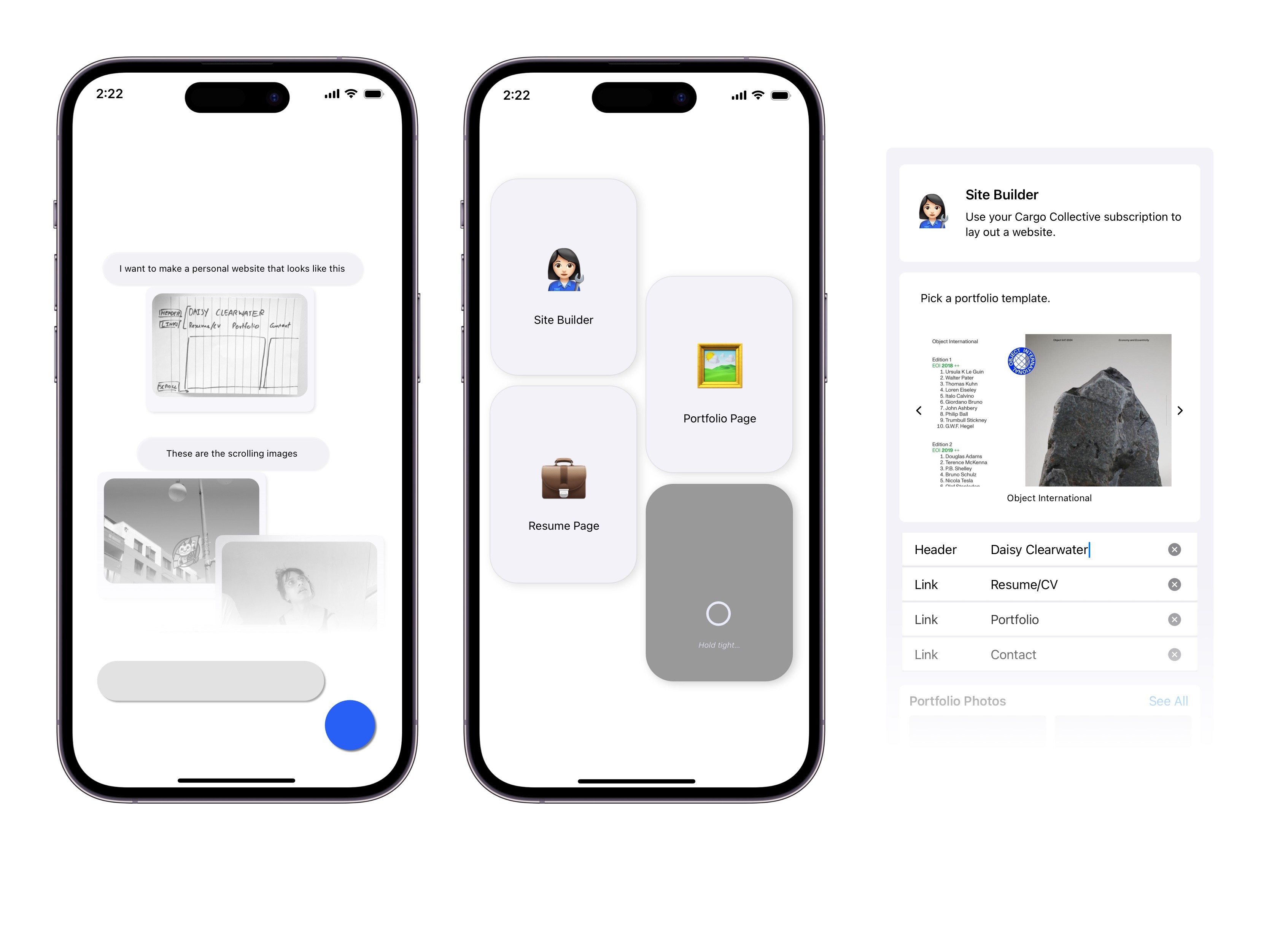

If AI is agnostic to media types and data formats, it's free to see media the way you see it.

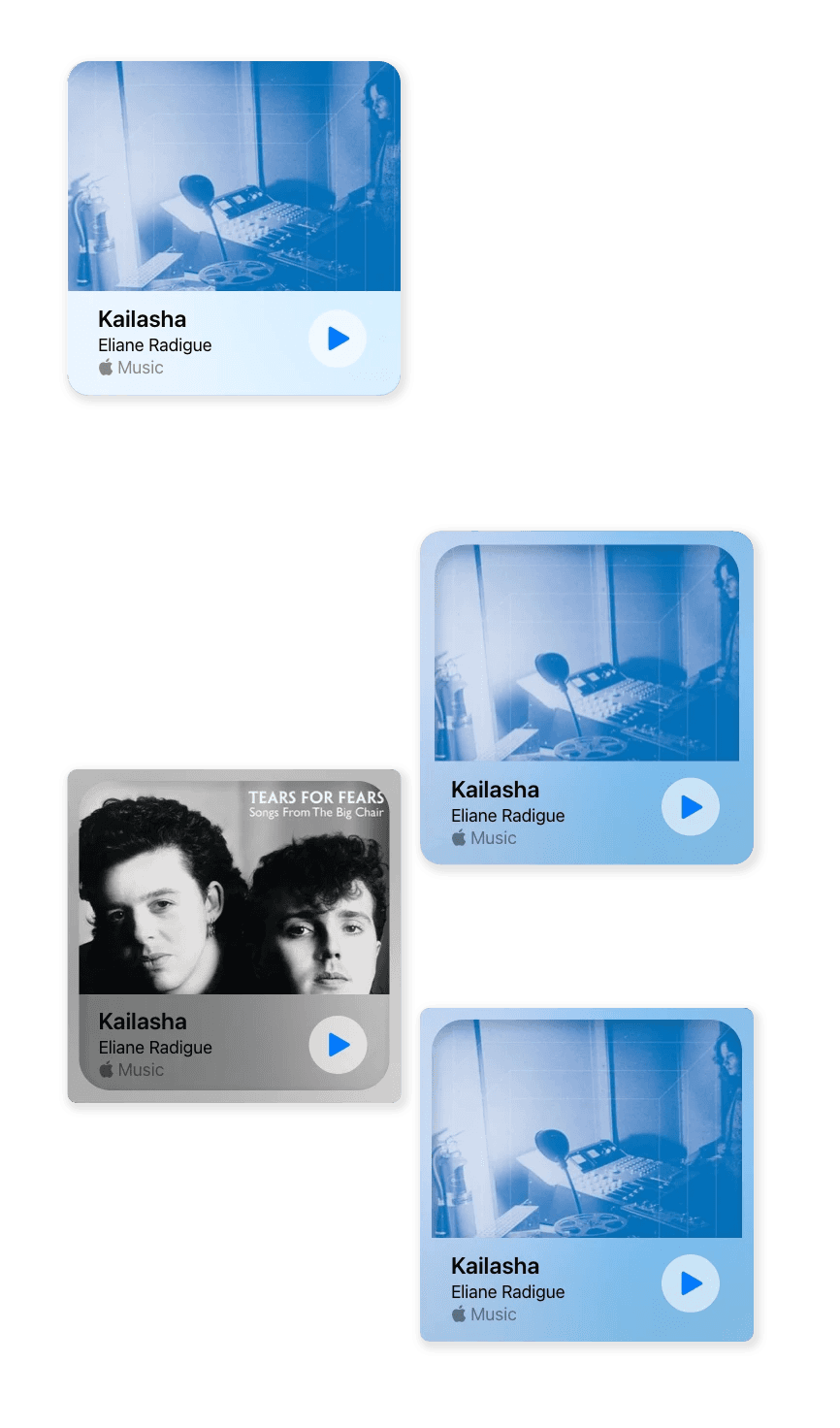

My first brief for this design was to develop a visual language for media files that is consistent and intuitive, and that tempts the user to put it into play.

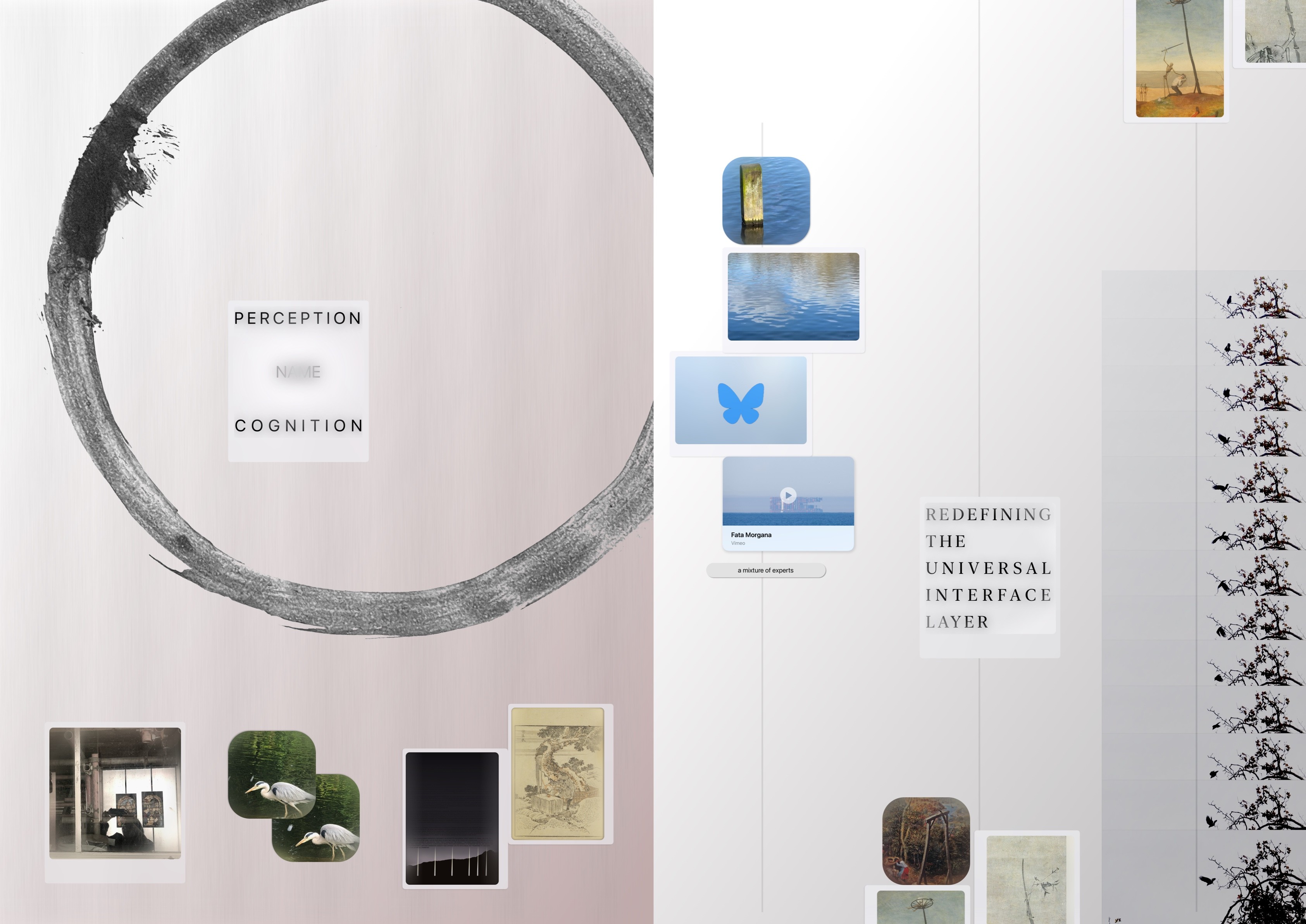

I started by studying the iOS treatment of Universal Links, then improvised on it. I wanted UI elements with a solidity and pop to them, so I focused on a holographic paradigm built from layers of glass.

The media objects are not passive views but active affordances: they needed to be freely reconfigurable at any step in the creative process. This meant they had to feel realer than life.

The data model, a framework of cooperating LLMs, is currently being developed at Moon. The usability design I developed (which will surely evolve!) set the expectations for the core functionality the machine learning must provide.